Introduction

This book covers some tech notes / guides / howtos etc. that sometimes I refer to.

Also documenting here in public so I can easily share the reference with others (as opposed to e.g. a gist)

Running bitcoin-core on a VPS

We will install and run bitcoin-core on a VPS to support the bitcoin network.

Since a VPS would typically not have enough resources for the whole 300GB+ blockchain, we will use the pruned blockchain which is ~7GB.

Downloading & installing

Find the download link for the latest bitcoin-core from https://bitcoin.org/en/download

Then, download it to a tmp directory, extract it, and install it:

cd /tmp

wget https://bitcoin.org/bin/bitcoin-core-25.0/bitcoin-25.0-x86_64-linux-gnu.tar.gz

tar xvf bitcoin-25.0-x86_64-linux-gnu.tar.gz

sudo install -m 0755 -o root -g root -t /usr/local/bin bitcoin-25.0/bin/*

Pruning

Before running the bitcoin daemon, setup the bitcoin config to prune the chain:

mkdir -p ~/.bitcoin

echo "prune=550" >> ~/.bitcoin/bitcoin.conf

Running the node

For simplicity, in just a screen, you can run bitcoind

TODO: Service or something

Running a monero node (pruned) on a VPS

Note: The pruned node still needs ~50GB!

Downloading & installing

Monero distributes a tard set of binaries directly, which we will save into /opt.

In a root terminal:

mkdir -p /opt/monero

chown -R ubuntu:ubuntu /opt/monero

Then as regular user:

cd /tmp

wget https://downloads.getmonero.org/linux64

tar -xjvf linux64 --strip-components=1 -C /opt/monero

Run the node in prune mode:

(cd into /opt/monero first...)

./monerod --prune-blockchain --detach

You can check progress by tailing the log:

tail -f ~/.bitmonero/bitmonero.log

Connecting a monero wallet to a node over tor

This assumes you've tor already setup on your machine.

To connect the wallet CLI to the monero network over tor, you can do:

./monero-wallet-cli --proxy 127.0.0.1:9050 --daemon-address lrtrju7tz72422sjmwakygfu7xgskaawiqmfulmssfzx7aofatfkmvid.onion:18089

Here --proxy 127.0.0.1:9050 assumes tor is listening on the default port, and --daemon-address is the remote onion node.

If you're not running your own node, you can find a list at monero.fail

Exposting a local webservice over the internet via Cloudflare Tunnel

Go to Cloudflare Dashboard -> Zero Trust -> Networks -> Tunnels

Create a tunnel via GUI, it will give command to run to install cloudflared and setup the tunnel on the host machine. This step can sometimes take 60-120 seconds! You'll get something like:

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 16.9M 100 16.9M 0 0 13.2M 0 0:00:01 0:00:01 --:--:-- 38.2M

Selecting previously unselected package cloudflared.

(Reading database ... 106916 files and directories currently installed.)

Preparing to unpack cloudflared.deb ...

Unpacking cloudflared (2024.2.0) ...

Setting up cloudflared (2024.2.0) ...

Processing triggers for man-db (2.10.2-1) ...

2024-02-19T12:13:16Z INF Using Systemd

2024-02-19T12:15:05Z INF Linux service for cloudflared installed successfully

Then, again in the Cloudflare Dashboard, just attach it to a subdomain and setup the local port, ezpz!

ntopng

ntopng is a pretty cool tool to view network traffic in real time.

It also performs DPI to extract some metadata about connections you can observe, such as SNI (for TLS), or the infohash (for BitTorrent).

It is especially interesting when run on a firewall / router of sorts.

Installation

We will be using Ubuntu 22.04 LTS in this guide.

1. Add the ntop repository into ubuntu

Inside a sudo shell:

apt-get install software-properties-common wget

add-apt-repository universe

wget https://packages.ntop.org/apt-stable/22.04/all/apt-ntop-stable.deb

apt install ./apt-ntop-stable.deb

2. Install ntop

apt-get clean all

apt-get update

apt-get install ntopng

References

- https://www.ntop.org/guides/ntopng/what_is_ntopng.html#installing-on-linux

- https://packages.ntop.org/apt-stable/

nginx

Running HTTP/3 enabled nginx

For simiplicity, everything here will assume its being done in a root shell.

Building

Installing dependencies

apt-get update

apt-get install build-essential libpcre3 zlib1g libpcre3-dev

Build LibreSSL

cd /tmp

wget https://ftp.openbsd.org/pub/OpenBSD/LibreSSL/libressl-3.9.0.tar.gz

tar xvzf libressl-3.9.0.tar.gz

cd libressl-3.9.0

./configure

make check

make install

Building nginx

cd /tmp

wget http://nginx.org/download/nginx-1.25.4.tar.gz

tar xvzf nginx-1.25.4.tar.gz

cd nginx-1.25.4

./configure \

--with-debug \

--with-http_v3_module \

--with-cc-opt="-I../libressl/build/include" \

--with-ld-opt="-L../libressl/build/lib"

make

Making the config dirs

cd /usr/local

mkdir nginx

cd nginx

mkdir logs

mkdir conf

References

- https://nginx.org/en/docs/quic.html

- https://ftp.openbsd.org/pub/OpenBSD/LibreSSL/

- https://github.com/libressl/portable?tab=readme-ov-file#steps-that-apply-to-all-builds

Setting up TLS and Config

We will now generate our TLS keys and nginx config

Generate TLS key & cert

cd /usr/local/nginx

openssl req -newkey rsa:2048 -keyout domain.key -x509 -days 365 -out domain.crt

Setup the nginx config

Write this to /usr/local/nginx/conf/nginx.conf

events{}

http {

log_format quic '$remote_addr - $remote_user [$time_local] '

'"$request" $status $body_bytes_sent '

'"$http_referer" "$http_user_agent" "$http3"';

access_log logs/access.log quic;

server {

# for better compatibility it's recommended

# to use the same port for quic and https

listen 443 quic reuseport;

listen 443 ssl;

ssl_certificate /usr/local/nginx/domain.crt;

ssl_certificate_key /usr/local/nginx/domain.key;

location / {

# required for browsers to direct them to quic port

add_header Alt-Svc 'h3=":443"; ma=86400';

}

}

}

Make a dummy page

cd /var

mkdir -p www

cd www

mkdir -p html

cd html

echo "Hi" > index.html

Run nginx!

Now you can run nginx and serve your website over HTTP/3:

LD_LIBRARY_PATH=/usr/local/lib/ /tmp/nginx-1.25.4/objs/nginx

TODO

Make tutorial less hacky (LD library preload for LibreSSL, non standard nginx config dir etc.)

Running ECH enabled nginx

ECH is a good way to hide the SNI of the website being connected to.

However, since ECH is not yet an official RFC (Feb 2025) and not many libs support it (especially on the server side), we need to do some hacky stuff to deploy it.

Specifically, we will use openssl & nginx forks by the great people at defo.ie to deploy ECH support.

Building

Environment

Assuming a clean VPS. We're gonna do all our work in a ech directory in the home folder

sudo apt-get install libpcre3 libpcre3-dev

cd ~

mkdir -p ech

Building the OpenSSL fork

This fork adds support for ECH into openssl.

cd ~

mkdir -p code

cd code

git clone -b ECH-draft-13c --single-branch https://github.com/sftcd/openssl.git

cd openssl

./config -d

make -j$(nproc)

Building the nginx fork

This fork uses the forked OpenSSL along with Nginx to support incoming ECH TLS handshakes.

cd ~/code

git clone -b ECH-experimental --single-branch https://github.com/sftcd/nginx.git

cd nginx

./auto/configure --with-debug --prefix=nginx --with-http_ssl_module --with-openssl=$HOME/ech/openssl --with-openssl-opt="--debug"

make -j$(nproc)

Now nginx should be compiled with the ECH compatible OpenSSL!

The binary should be at ~/ech/nginx/objs/nginx

Configs & keys

Config directory

We'll now make a config directory for our ECH keys, TLS key & nginx configuration & data.

cd ~/ech

mkdir -p conf

cd conf

mkdir -p nginx/www

mkdir -p nginx/logs

mkdir -p nginx/conf/echkeydir

mkdir -p nginx/conf/tlskeydir

Generate ECH keys

Next, we'll generate our keys for ECH, using the OpenSSL we compiled with ECH support. The public key is what will be used to encrypt the ClientHello.

Choose any public_name you want apeparing in the OuterSNI (which passive eavesdroppers can see). For instance:

cd ~/ech/conf/nginx/conf/echkeydir

../../../../openssl/apps/openssl ech -public_name cia.gov -pemout cia.gov.pem.ech

This file also contains the ECHCONFIG, which you'll need in the next step to setup DNS records.

Generate TLS keys

These are the keys that will be actually used for the TLS connection with the Inner SNI (real hostname). You'd likely want them signed by a commonly trusted CA, such as Let's Encrypt.

Actually generating the TLS keys is out of scope, but a guide can be found here.

The certificate and key should be placed in

~/ech/conf/nginx/conf/tlskeydir/domain.crt

~/ech/conf/nginx/conf/tlskeydir/domain.key

accordingly.

Deploying

Setup DNS records for ECHConfig

Once you generate the ECH keys, the contents will look something like this:

-----BEGIN PRIVATE KEY-----

[REDACTED]

-----END PRIVATE KEY-----

-----BEGIN ECHCONFIG-----

AD7+DQA6hAAgACAmYD8cK8laATn/iKI1jt79RGkFzoppTl0LVpshC/Q1VQAEAAEAAQALZXhhbXBsZS5jb20AAA==

-----END ECHCONFIG-----

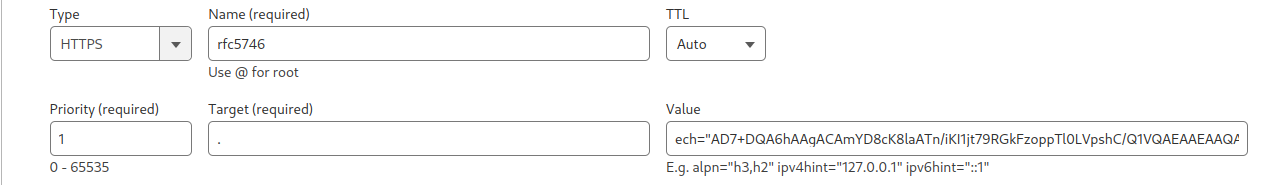

The base64 text is what you need to add to your domains "HTTPS" DNS record.

For example, if your domain name (whatever value you used for public_name in the keygen step doesn't matter) is rfc5746.mywaifu.best , then you need to add a HTTPS record in your DNS, as such:

Note: the value should be: ech="THE BASE 64 ECHCONFIG"

Configure nginx

A sample configuration file could look like this: (nginx-ech.conf):

worker_processes 1;

error_log logs/error.log info;

events {

worker_connections 1024;

}

http {

access_log logs/access.log combined;

ssl_echkeydir echkeydir;

server {

listen 4443 default_server ssl;

ssl_certificate tlskeydir/domain.crt;

ssl_certificate_key tlskeydir/domain.key;

ssl_protocols TLSv1.3;

server_name rfc5746.mywaifu.best;

location / {

root www;

index index.html index.htm;

}

}

}

Replace server_name with whatever your real server (domain) name is, e.g. for me it is rfc5746.mywaifu.best). Now put this config in ~/ech/conf/nginx/conf/nginx.conf .

Run nginx

cd ~/ech/conf

# test configuration

../nginx/objs/nginx -t

# actuall start NGINX

../nginx/objs/nginx

Bonus: Multiple ECHConfigs with different SNIs

If you configure nginx to listen on multiple ports, you can advertise separate ECHConfigs for each port, with their own SNI. Specifically, the ECHConfig advertised in the HTTPS RR follows "Port Prefix Naming" as per Section 2.3 of RFC9460.

Generating a new ECHConfig

Let's say, now instead of example.com, we want to use the SNI cia.gov. Let's first generate an ECHConfig for this domain:

cd ~/ech/conf/nginx/conf/echkeydir

../../../../openssl/apps/openssl ech -public_name cia.gov -pemout cia.gov.pem.ech

Tell NGINX to listen on this port

If we want port 4443 to advertise this ECHConfig and be connectable, we just add a listen directive for this port in our NGINX config:

listen 4443 default_server ssl;

Advertise the ECHConfig

Finally, we need a new DNS HTTPS record for specifically for this port, based on "Port Prefix Naming". So add a new HTTPS record for your domain, with the target as:

_4443._https.rfc5746.mywaifu.best

Note: The new part is _4443._https.! This will tell browsers (or well, more generally ECH capable clients) the ECHConfig to use on this port.

Reload NGINX

cd ~/ech/conf

# test configuration

../nginx/objs/nginx -t

# actuall start NGINX

../nginx/objs/nginx -s reload

Reference

- https://github.com/sftcd/openssl/blob/9e66beb759d274f3069e19cc96c793712e83122c/esnistuff/nginx.md?plain=1#L172

- https://github.com/sftcd/openssl/issues/26

- https://guardianproject.info/2023/11/10/quick-set-up-guide-for-encrypted-client-hello-ech/

CA Signed TLS certificates

Sometimes it can be useful to get TLS certificates signed by a CA. To do this for free, you need a domain name.

Usually ACME clients like certbot will auto-detect a webserver and use that to "serve" the challenge.

However this required pointing the domain to an IP address, which can be persisted in DNS history records. Another way to do it, from ANY machine, is via the DNS challenge.

ACME DNS Challenge

We will use certbot for this. Assuming the domain you want a certificate for is example.com, then the following command will suffice:

certbot -d example.com --manual --preferred-challenges dns certonly

This will ask you to create a DNS TXT record with a certain value, to prove you control the domain name. Once that is done, you will have the signed certificate and private key on your machine, to do whatever you want with.

Wireguard

Server

TODO

Obfuscation

Certain firewalls might try and block outbound wireguard connections to a server.

Typically, wireguard as a protocol is quite easy to identify if some is implementing DPI (Deep Packet Inspection). This is harder to hide. However, if the firewall is somewhat naive, e.g. just looking for the default port or something, there are ways around this.

Offering wireguard on additional ports

Some firewalls may block the known Wireguard port (51820). Even stricter firewalls may just block UDP traffic on all but very few ports.

Typically, port 53 will not be blocked (DNS). Some other more common UDP ports include:

- 123 (NTP - Network Time Protocol)

- 443 (HTTPS over QUIC)

- 1725 (Steam - Gaming software)

- 27015 (Common Counter Strike server port)

If any of these ports are free on your server, you can use IPtables to configure forwarding these ports to the wireguard port transparently, so clients can try connecting on these alternative ports if 51820 is blocked.

No changes are needed on the server's wireguard config.

The iptables rules look like:

iptables -A PREROUTING -d 1.2.3.4/32 -i ens3 -p udp -m multiport --dports 53,123,1725,27015 -j REDIRECT --to-ports 51820 -t nat

Where:

1.2.3.4is the public IP of the server (that wireguard is listening on)ens3is the corresponding53,123,1725,27015is the list of UDP ports you want to use as backups for wireguard

Client

Creating a client config for routing all traffic "via VPN"

In a temporary directory:

wg genkey | tee private.key

cat private.key | wg pubkey | tee public.key

Then, make a config file e.g. wireguard_config.conf :

[Interface]

PrivateKey = [private key just generated]

Address = 10.10.0.3/24 (an IP on the subnet of the server's wireguard interface)

[Peer]

PublicKey = [server public key]

AllowedIPs = 0.0.0.0/0 (all traffic to be routed through this tunnel)

Endpoint = [server public IP address:port]

You then need to add the client as authorized to connect to the server. On the server, run:

wg set wg1 peer [public key just generated] allowed-ips 10.10.0.3

Replace 10.10.0.3 with whatever IP was chosen in the config file for the client above.

To add the config to something like a smartphone, you can qrencode it via:

qrencode -t ansiutf8 < wireguard_config.conf

Self-hosting E-Mail is a fun exercise, and not as daunting as people make it out to be. IP reputation is real, but if you plan to use it for personal use only, it is not too hard to get accepted.

We will use a classic combo of Postfix + Dovecot.

TODO: Explain the roles of postfix / dovecot.

Maintenance

Rotating TLS certs

TLS is used to protect communication on two fronts:

- Postfix (SMTP): via STARTTLS on port 587

- Dovecot (IMAP): via TLS on port 993

We need to generare the certs for the domain on the MX record, e.g. mail.saxrag.com for me. These can be generated following the guide here.

Once rotated, postfix and dovecot need to be restarted. This can be done via:

postfix stop && postfix start

systemctl restart dovecot

You can use openssl to verify the new certs are being offered, e.g. for my domain:

openssl s_client -starttls smtp -connect mail.saxrag.com:587

openssl s_client -connect mail.saxrag.com:993

TLS configs

YOu can find the location of the TLS certs/keys used by postfix/dovecot typically at:

/etc/postfix/main.cf

/etc/dovecot/conf.d/10-ssl.conf

HTTP(S) Proxy

Running an HTTP/S proxy is a useful way to try and achieve a couple of things:

- Hide the original IP address you're making the reqyest from (from the target website)

- Hide the intended website you're connecting to (from ISPs, censors) - only applicable to HTTPS proxies

Note: HTTP(S) proxies work best for HTTP(S) requests, though by using CONNECT it is possible to relay arbitrary TCP traffic as well.

However, proxies are typically configured at the application level. Even when you set an operating system level proxy (e.g. in Android), it is not necessary that an application respects it. If you need all your internet traffic routed securely, you should use a VPN.

Maintenance

Rotating TLS certs

If you use stunnel to host an HTTPS proxy in addition to the HTTP proxy, then from tme to time you would need to rotate the TLS certs.

Generating new certs can be done by following the guide here.

Once rotated, assuming the stunnel.conf points the the location of the new certs, it needs to be restarted

kill -9 $(pgrep stunnel)

stunnel

Installing tor on ubuntu

Basic steps

All these should be done in a root terminal.

1. Install https transport for apt

apt install apt-transport-https

2. Add the tor distribution file in apt sources (i.e. /etc/apt/sources.list.d/tor.list)

First get your distribution name:

$ lsb_release -c

Codename: jammy

Use this in the file:

deb [signed-by=/usr/share/keyrings/tor-archive-keyring.gpg] https://deb.torproject.org/torproject.org jammy main

deb-src [signed-by=/usr/share/keyrings/tor-archive-keyring.gpg] https://deb.torproject.org/torproject.org jammy main

3. Get the tor GPG key

wget -qO- https://deb.torproject.org/torproject.org/A3C4F0F979CAA22CDBA8F512EE8CBC9E886DDD89.asc | gpg --dearmor | tee /usr/share/keyrings/tor-archive-keyring.gpg >/dev/null

4. Install tor!

apt update

apt install tor deb.torproject.org-keyring

5. Verification

curl --proxy socks5h://localhost:9050 icanhazip.com

You can then put the IP into AbuseDB or check on the Tor Relay Metrics page.

You can also view the tor logs via:

journalctl -f -u tor

References

Mostly everything is from the official tor docs.

Installing some programming languages

Some tips on how to setup a VPS or similar with programming languages

Prerequisites

Usually a good idea to have build-essential & pkg-config:

sudo apt-get install build-essential pkg-config

Installing Node.JS

Official website: https://nodejs.org/en/download/package-manager/current

Quick install via n

Github Repo: https://github.com/tj/n

curl -L https://bit.ly/n-install | bash

Installing Rust

Official website: https://www.rust-lang.org/tools/install

Quick install via Rustup

Website: https://rustup.rs/

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

Crypto Stuff

Cryptographic references for PGP, GPG, OpenSSL etc.

DISCLAIMER: This primarily aimed as a reference for myself. It may not be the most secure or best way to do this.

PGP

PGP is great for signing and encryption. Signing is an especially valuable concept, since it has stronger guarantees on the author as opposed to any kind of e.g. social media profile, which by nature have backdoors accessible by staff.

Keygen

We will use keys based on Curve25519. For signing, this means Ed25519, and for encryption X25519.

We will first generate a master ceritification key. The password for this guy should be very strong. For the subsequent subkeys we will change the password later.

gpg --quick-generate-key 'Your Name <email@domain.com>' ed25519 cert never

You should get something like:

pub ed25519 2024-03-25 [C]

224245002A2A0003CD7CF693FFC081E4E8D5FA41

uid Your Name <email@domain.com>

The long hex string is the key fingerprint. We will save it in a temporary variable for generating the subkeys:

export KEYFP=224245002A2A0003CD7CF693FFC081E4E8D5FA41

Now we will generate the subkeys for signing and encryption. Here we will use a 3 year expiration, you can change it to something else if you'd like:

gpg --quick-add-key $KEYFP ed25519 sign 3y

gpg --quick-add-key $KEYFP cv25519 encr 3y

Our keyring should now look like:

$ gpg -K --with-keygrip

sec ed25519 2024-03-25 [C]

224245002A2A0003CD7CF693FFC081E4E8D5FA41

Keygrip = FCC73C8DA31BEB61ECF3B2316131CBF9AFE02675

uid [ultimate] Your Name <email@domain.com>

ssb ed25519 2024-03-25 [S] [expires: 2027-03-25]

Keygrip = 5C407E3653C2BC7C1E4F06BB1179A9A317A88F52

ssb cv25519 2024-03-25 [E] [expires: 2027-03-25]

Keygrip = EBA000BD362AE3CFC512730544B133E7BBA7E217

Now that we generated the subkeys for signing and encryption, we can delete the master key. But we need to first save it, for when we need to generate new keys (e.g. after the current ones expire).

Back up the master key via

$ gpg --armor --export-secret-keys $KEYFP > gpg_master_key.asc

Now, we will delete the master key from our keyring. Go to your private key directory (usually ~/.gnupg), and delete the file matching the keygrip of the sec key. In our case it is FCC73C8DA31BEB61ECF3B2316131CBF9AFE02675.key.

Now when you view your keyring, GPG will print sec# indicating the secret key is not available:

sec# ed25519 2024-03-25 [C]

224245002A2A0003CD7CF693FFC081E4E8D5FA41

Keygrip = FCC73C8DA31BEB61ECF3B2316131CBF9AFE02675

uid [ultimate] Your Name <email@domain.com>

ssb ed25519 2024-03-25 [S] [expires: 2027-03-25]

Keygrip = 5C407E3653C2BC7C1E4F06BB1179A9A317A88F52

ssb cv25519 2024-03-25 [E] [expires: 2027-03-25]

Keygrip = EBA000BD362AE3CFC512730544B133E7BBA7E217

Make sure to save gpg_master_key.asc somewhere encrypted for when you need it!

Updating the password

Now with the master key deleted from your keyring, we can edit the password for the subkeys.

Run gpg -K --keyid-format 0xLONG , and copy the KEYID of your master key. It would look something like:

sec# ed25519/0xFFC081E4E8D5FA41 2024-03-25 [C]

Tell GPG you want to edit the key

gpg --edit-key 0xFFC081E4E8D5FA41

At the gpg> prompt, type passwd . This will prompt you for the current password, which would be the strong password set when generating the master key.

After entering that, it will ask you for a new password. Put in whatever you want.

Finally, at the gpg> prompt, type quit to finish.

References

- https://github.com/drduh/YubiKey-Guide

- https://musigma.blog/2021/05/09/gpg-ssh-ed25519.html

OpenSSL

Some tips and tricks for using OpenSSL.

For more indepth into some patterns of how e.g. ECDH, RSA work, my repo cli-crypto may be more useful.

Generating TLS certificates

RSA

First, we generate and RSA key:

openssl genrsa -out example.com.key 4096

Generate the certificate signing request:

openssl req -key example.com.key -new -out example.com.csr

Self-signing the certificate:

openssl x509 -signkey example.com.key -in example.com.csr -req -days 3650 -out example.com.crt

Elliptic Curve

Basically the same, but we will generate an Ed25519 key instead

openssl genpkey -algorithm ed25519 -out example.com.key

Wireshark

Wireshark is a great tool for inspecting network traffic, with support for a lot of filters and such.

Remote Wireshark

In many cases, it can be useful to view the traffic of a remote machine locally via Wireshark.

Although tcpdump via SSH in the terminal is ok for some initial poking around, the convenicne of Wireshark w/ a GUI, deeper protocol analysis etc. is quite nifty.

There are two ways to do so, depending on tools available. In both, the rudimentary filter not tcp port 22 is used to not show SSH traffic, which otherwise would lead to a positive feedback loop.

using dumpcap

If the remote machine has dumpcap installed, then it can be launched using via the SSH command with output redirected to stdout, which is then piped to Wireshark locally:

wireshark -k -i <(ssh HOSTNAME "dumpcap -P -w - -f 'not tcp port 22'")

using tcpdump

If the remote machine has tcpdump installed, then it can be launched via the following command:

wireshark -k -i <(ssh HOSTNAME "sudo tcpdump -w - -f 'not tcp port 22'")

TLS Inspection

Most programs will use TLS to encrypt their HTTP requests to remote servers, so in Wireshark you'd be unable to view the actual data apart from some TLS metadata.

If the application supports dumping TLS secrets via SSLKEYLOGFILE, then you can instruct wireshark to use this file to decrypt the TLS communcation.

Specifying the keylogfile in wireshark

Navigate to the TLS submenu via:

- Edit -> Preferences -> Protocols -> TLS

Then in the (Pre)-Master-Secret log filename, put the path to the keylogfile, e.g. /tmp/sslkeylogfile.txt

Inspecting Node.JS traffic

Node.JS supports dumping TLS secrets via the --tls-keylog parameter.

To set it as an environment variable, you can do:

export NODE_OPTIONS="--tls-keylog=/tmp/sslkeylogfile.txt"

If a node application then makes a TLS connection using the native TLS libraries in Node.JS, it will log the secrets to the specified file to be inspected via Wireshark.

cURL

cURL respects the SSLKEYLOGFILE environment variable.

So you can just set it to something like

export SSLKEYLOGFILE=/tmp/sslkeylogfile.txt

and then run cURL commands.

Inspecting Chromium

A good way to dump all TLS traffic from chromium, especially useful when trying to debug meta-requests (like DoH), or if there are devtools detectors, is the following:

chromium --ssl-key-log-file=/tmp/sslkeylogfile.txt --disable-http2 --disable-quic

Disabling HTTP/2 & QUIC can make the requests a bit more readable in Wireshark

MiTMing Chromium

In some cases it can be useful to MiTM Chromium instead to be able to change the req/res, especially to control some FE behavior (and when JS debugging is too tedious / unecessary).

Launch mitmproxy , and then launc chromium via:

chromium --proxy-server=http://127.0.0.1:8080 --ignore-certificate-errors

Now in the mitmweb interface we can intercept and modify requests & responses!

Sway

sway is a tiling window manager for Wayland.

Transparent Screenlock

Swaylock used to support alpha channels allowing for transparent screenlock, but unfortunately they removed support for this, since sway (and wayland) planned to drop input-inhibitor.

TODO: Confirm which version removed it

WaylSwayand dropped it in 1.10 as a result of wlroots dropping input-inhibitor in 0.18.0.

However, if you compile swaylock, sway and wlroots in the version that have alpha & input-inhibitor support, you can still use transparent lock.

Compilation Instructions

For sway (and wlroots):

git clone https://github.com/swaywm/sway.git

git checkout 1.9

git clone https://gitlab.freedesktop.org/wlroots/wlroots.git subprojects/wlroots

cd subprojects/wlroots

git checkout 0.17.4

cd ../../

meson build/

meson configure -Dwerror=false

ninja -C build/

For swaylock:

git clone https://github.com/swaywm/swaylock.git

git checkout 1.5 (TBD?)

meson build

Swaylock comment

There is a line which causes compilation warnings, here is a patch:

$ git diff

diff --git a/main.c b/main.c

index c65d68a..a5d2986 100644

--- a/main.c

+++ b/main.c

@@ -1057,7 +1057,7 @@ static int load_config(char *path, struct swaylock_state *state,

char *flag = malloc(nread + 3);

if (flag == NULL) {

free(line);

- free(config);

+ // free(config);

swaylock_log(LOG_ERROR, "Failed to allocate memory");

return 0;

}

AV1 encoding for BluRays

Assuming you've ffmpeg built with SVT-AV1 already. (TODO: Write a guide for this).

These are my settings. They may not work for you, but generically they seem to get the job done for me.

Figuring out the crop

This is especially important for movies, since often they have black bars on the top and bottom. Cropping them out will make encoding faster and waste less space.

Note: Some movies have changing aspect ratios! For instance: The Dark Knight (2008). So be careful.

Using ffmpeg, you can get the cropdetect as such:

ffmpeg -i Filename.mkv -vf cropdetect,metadata=mode=print -f null -

Which will print a lot of messages, looking like:

[Parsed_cropdetect_0 @ 0x69ed680] x1:0 x2:1919 y1:138 y2:941 w:1920 h:800 x:0 y:140 pts:76743 t:76.743000 limit:0.094118 crop=1920:800:0:140

[Parsed_metadata_1 @ 0x69f3f80] frame:1840 pts:76743 pts_time:76.743

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.x1=0

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.x2=1919

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.y1=138

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.y2=941

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.w=1920

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.h=800

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.x=0

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.y=140

[Parsed_metadata_1 @ 0x69f3f80] lavfi.cropdetect.limit=0.094118

Here, crop=1920:800:0:140 is what you want.

Encoding the video

Since I've built ffav1 as ffmpeg with (and only with) SVT-AV1, I will encode the video independently from audio / subs etc.

Add in the correct cropdetect, or leave it out if there's no cropping.

ffav1 -hide_banner -y -i Filename.mkv -vf crop=1920:800:0:140 -map 0:v:0 -c:v:0 libsvtav1 -preset 6 -svtav1-params tune=0:enable-overlays=1:scm=0 -pix_fmt yuv420p10le -g 120 -b:v:0 5000k -pass 1 -an -f null /dev/null && \

ffav1 -hide_banner -y -i Filename.mkv -vf crop=1920:800:0:140 -map 0:v:0 -c:v:0 libsvtav1 -preset 6 -svtav1-params tune=0:enable-overlays=1:scm=0 -pix_fmt yuv420p10le -g 120 -b:v:0 5000k -pass 2 -an Filename_AV1.mkv

Encoding the audio

First, determine the audio formats present on the source media, using something like mediainfo filename.mkv , which let's say, gives:

Audio #1

ID : 2

Format : MLP FBA 16-ch

Format/Info : Meridian Lossless Packing FBA with 16-channel presentation

Commercial name : Dolby TrueHD with Dolby Atmos

Codec ID : A_TRUEHD

Duration : 2 h 35 min

Bit rate mode : Variable

Bit rate : 3 243 kb/s

Maximum bit rate : 5 472 kb/s

Channel(s) : 8 channels

Channel layout : L R C LFE Ls Rs Lb Rb

Sampling rate : 48.0 kHz

Frame rate : 1 200.000 FPS (40 SPF)

Compression mode : Lossless

Stream size : 3.52 GiB (12%)

Title : Dolby TrueHD/Atmos Audio / 7.1 / 48 kHz / 3243 kbps / 24-bit

Language : English

Default : Yes

Forced : No

Number of dynamic objects : 11

Bed channel count : 1 channel

Bed channel configuration : LFE

Audio #2

ID : 3

Format : AC-3

Format/Info : Audio Coding 3

Commercial name : Dolby Digital

Format settings : Dolby Surround EX

Codec ID : A_AC3

Duration : 2 h 35 min

Bit rate mode : Constant

Bit rate : 448 kb/s

Channel(s) : 6 channels

Channel layout : L R C LFE Ls Rs

Sampling rate : 48.0 kHz

Frame rate : 31.250 FPS (1536 SPF)

Compression mode : Lossy

Stream size : 498 MiB (2%)

Title : Compatibility Track / Dolby Digital EX Audio / 5.1-EX / 48 kHz / 448 kbps

Language : English

Service kind : Complete Main

Default : No

Forced : No

Extracting an existing audio track

In this example, there is already a decent 5.1ch Doby Digital track (AC3) with a decent bitrate (448kb/s). So we can identify it with mkvmerge and then extract it:

$ mkvmerge -i Filename.mkv

File 'Filename.mkv': container: Matroska

Track ID 0: video (AVC/H.264/MPEG-4p10)

Track ID 1: audio (TrueHD Atmos)

Track ID 2: audio (AC-3 Dolby Surround EX)

Track ID 3: subtitles (HDMV PGS)

Track ID 4: subtitles (HDMV PGS)

Track ID 5: subtitles (HDMV PGS)

Track ID 6: subtitles (HDMV PGS)

Track ID 7: subtitles (HDMV PGS)

Track ID 8: subtitles (HDMV PGS)

Track ID 9: subtitles (HDMV PGS)

Track ID 10: subtitles (HDMV PGS)

Track ID 11: subtitles (HDMV PGS)

Track ID 12: subtitles (HDMV PGS)

Track ID 13: subtitles (HDMV PGS)

Chapters: 18 entries

Global tags: 2 entries

And extract the track we want with

$ mkvextract Filename.mkv tracks 2:Filename_audio.ac3

Extracting track 2 with the CodecID 'A_AC3' to the file 'Filename_audio.ac3'. Container format: Dolby Digital (AC-3)

Progress: 100%

Encoding an existing audio track

If the only audio was some form of raw audio (e.g. Dolby TrueHD / DTS-HD), we can encode it to a compressed format (such as DD5.1) using:

ffmpeg -hide_banner Filename.mkv -map 0:a:0 -c:a:0 ac3 -b:a:0 640k Filename_audio.ac3

Extracting the chapters

If the original source had chapters, you can extract them to merge them into the final encode, via:

$ mkvextract Filename.mkv chapters Filename_Chapters.xml

Merging the files together

Once you have the video, audio, subtitles, you can merge them together using something like:

mkvmerge --title "Movie Name (Year)" \

--track-name "0:" Filename_AV1.mkv \

--track-name "0:English (DD5.1)" --language 0:eng Filename_audio.ac3 \

--track-name "0:English (SDH)" --language 0:eng Subtitles.srt \

-o Movie.Year.1080p.BluRay.DD5.1.AV1-GROUP.mkv

Encoding ogg files for iPod

If you use DownOnSpot to download songs, they are encoded with Vorbis and in an OGG container.

iTunes cannot import these (and the iPod can't play them back), so we need to convert them first.

Conversion

tl;dr

ffmpeg -i song.ogg -map_metadata 0:s:a:0 -acodec aac -b:a 320k -aac_pns 0 -movflags +faststart -vn song.m4a

Explanation

We need to encode them with AAC, and store them in an M4A container, for iTunes / iPod to be able to play them back.

The most vanilla way to do this is:

ffmpeg -i song.ogg -c:a aac song.m4a

However, this has a couple of problems:

- Won't get the metadata (e.g. song/album/artist title etc.)

- Regular weird high pitch noises when playing back on an iPod

Metadata

The metadata in the downloaded OGG tracks is stored at the stream level:

$ ffprobe -hide_banner Angels.ogg

Input #0, ogg, from 'Angels.ogg':

Duration: 00:04:25.00, start: 0.000000, bitrate: 328 kb/s

Stream #0:0: Audio: vorbis, 44100 Hz, stereo, fltp, 320 kb/s

Metadata:

title : Angels

album : Life Thru A Lens

artist : Robbie Williams

album_artist : Robbie Williams

track : 4

disc : 1

label : Universal-Island Records Ltd.

date : 1997-01-01

However, the AAC encoder in ffmpeg can only store the metadata at the top level (global metadata). -map_metadata 0:s:a:0 will do this for us, so it is available to iTunes.

AAC Perceptual Noise Substitution

Most iPods do not support the PNS feature in their AAC decoder, which ffmpeg by default uses. This results in tracks that sound fine on all devices except after syncing to the iPod!

To fix this, we need -aac_pns 0 .

References

- https://wiki.archlinux.org/title/IPod

- https://www.reddit.com/r/ipod/comments/r2cti3/squeaking_noises_only_through_the_ipod_and_only/hry0ys5/

Miscellaneous ffmpeg goodies

Encoding Instant Replay to share online

Nvidia has a feature called instant replay that saves x minutes of gameplay. This is saved at a fairly high bitrate. If you want to share a part of it with a friend online, then the file is way too big. Additionally, when sharing online, it is possible to get the context at a much lower resolution (e.g. 720p vs 1440p). Also when gaming on an ultrawide, if only the 16:9 part is relevant, then a crop also helps.

General formula

Ignoring scaling down to 720p and cropping, there are two main codecs that should be used:

- H.264 + AAC, good combo for sharing on Whatsapp etc.

- VP9 + Opus, good combo for sharing on Discord etc.

VP9 is more efficient and will give better results compared to H.264, but slightly less compatibility.

H.264

The general recipe I like to use is:

ffmpeg -i source.mp4 -c:v libx264 -b:v 2000k -c:a aac -b:a 96k clip.mp4

VP9

The general recipe I like to use is:

ffmpeg -i source.mp4 -c:v libvpx-vp9 -b:v 2000k -c:a libopus -b:a 96k clip.webm

Additional filters

To crop from my 3440x1440 ultrawide down to 16:9 (useful when the overlay is ultrawide but actual content is 16:9), I use: -vf crop=2560:1440:440:0

To resize down to 720p, I use: vf scale=1280:-1

Usually I will combine the two, and encode at 1500kbps. If you need to seek to a particular start point, use the -ss flag. And if you only want the next n seconds, use the -t flag. This example starts from the 4 minute mark and takes the next 10 seconds:

ffmpeg -ss 00:04:00 -i "source.mp4" -c:v libvpx-vp9 -b:v 1500k -vf "crop=2560:1440:440:0,scale=1280:-1" -preset veryslow -c:a libopus -b:a 96k -t 10 output.webm